LLM

-

2025-11 | aiday25-hz | Best practice of Blackwell GPU deployment in the Chinese market

何平, Senior Engineer, NVIDIA TensorRT team; 张一林, Senior Engineer, NVIDIA GPU Accelerated Computing Expert team | AI Open Day, 2025-11-07

-

2025-11 | aiday25-hz | TensorRT-LLM Large-scale Expert Parallelism Optimizations

Enwei Zhu (朱恩伟), Senior Engineer, NVIDIA Accelerated Computing Expert team; Jinyang Yuan (袁劲飏), Senior Engineer, NVIDIA Accelerated Computing Expert team

-

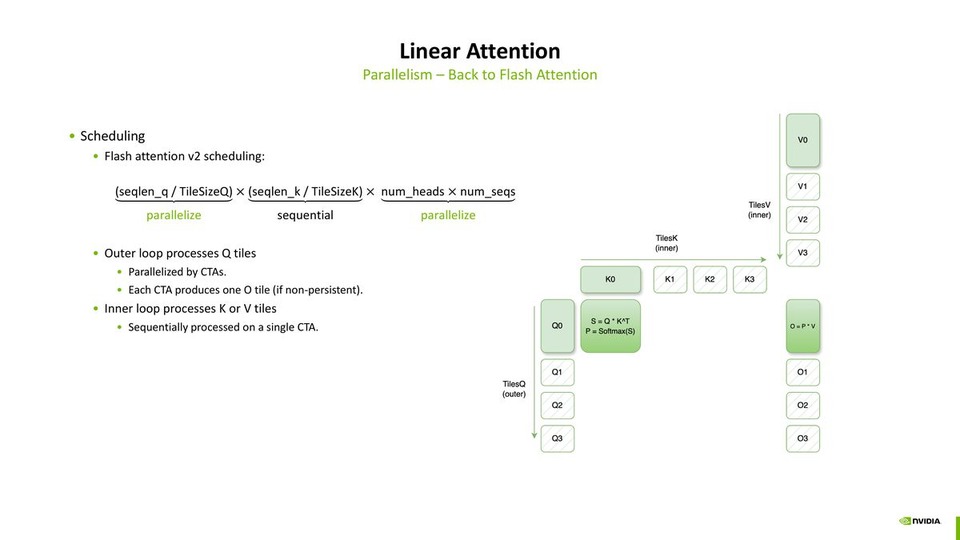

2025-11 | aiday25-hz | Linear Attention

韩广云, Senior Engineer, NVIDIA GPU Accelerated Computing Expert team | AI Open Day / 2025-11-07

-

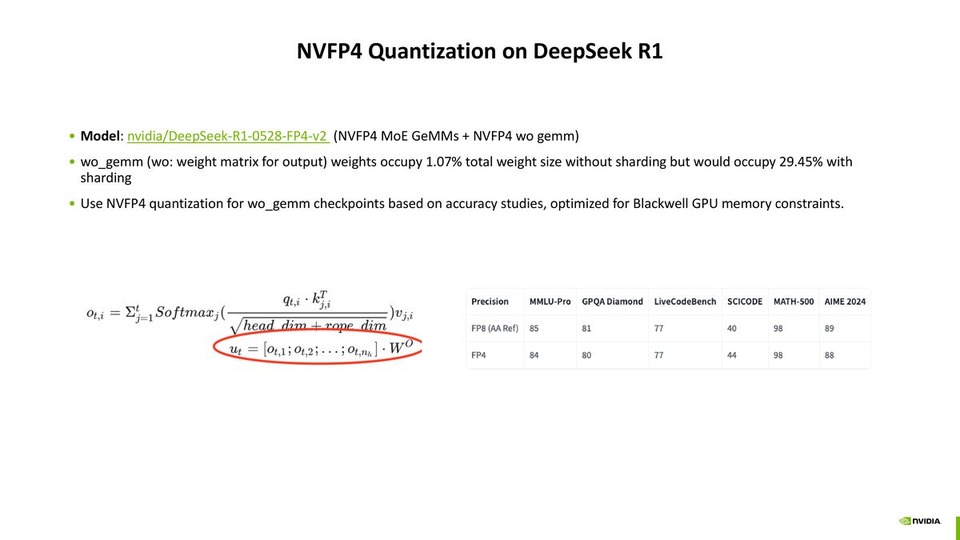

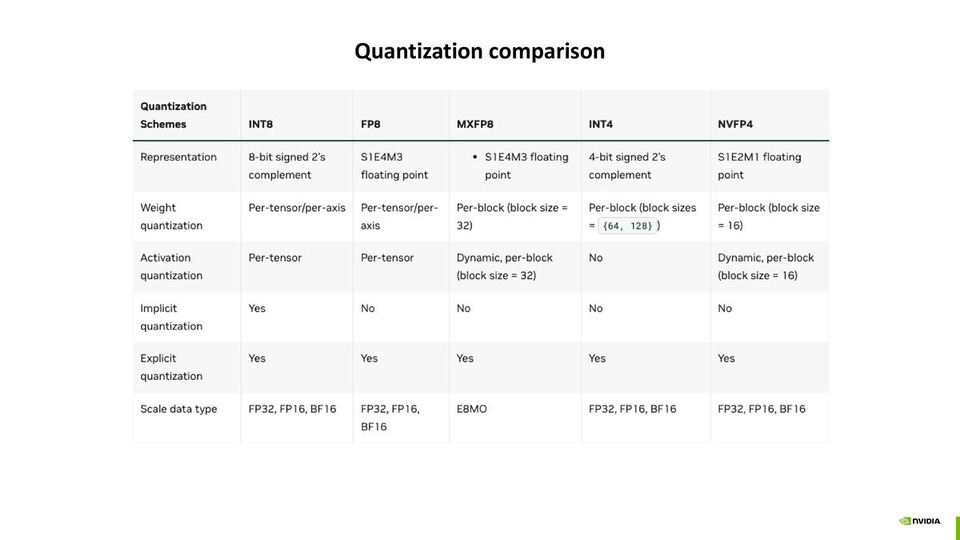

2025-11 | aiday25-hz | A Practical Guide to Deploying NVFP4 for Efficient Inference on Blackwell GPUs

薛博阳, Senior Engineer, NVIDIA Accelerated Computing Expert team | 2025-11-07

-

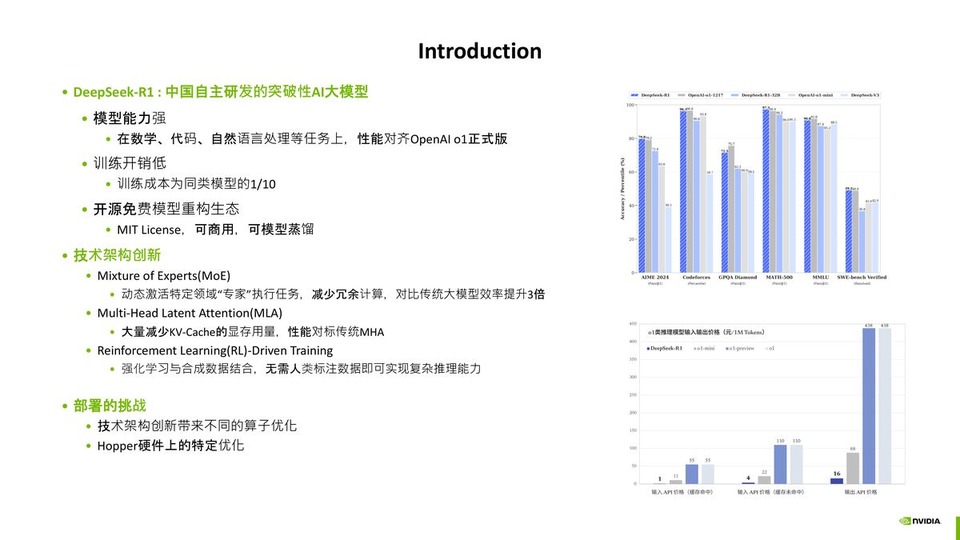

2025-05 | aiday25-bj | TensorRT-LLM Drives DeepSeek to Peak Performance: Joint Optimization Practice with Tencent

Raccoon Liu: Head of Large Model Inference, Tencent; 朱文熙: R&D Lead, Tencent Kaiwu Platform; 王猛: Senior Accelerated Computing Expert, NVIDIA

-

2025-05 | aiday25-bj | TensorRT-LLM × PyTorch: A New Development Paradigm for High-Performance LLM Inference

More examples and parameters: additional examples with extra parameters are available in examples/pytorch/quickstart_advanced.py.

-

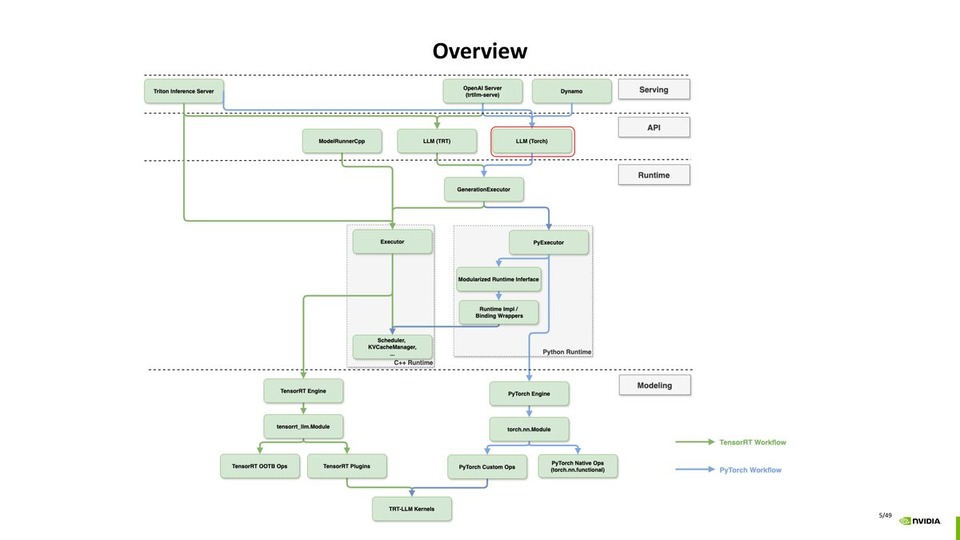

2025-05 | aiday25-bj | TensorRT-LLM

TensorRT LLM is designed to help users achieve the best performance when deploying large language model (LLM) inference on the NVIDIA AI platform.

-

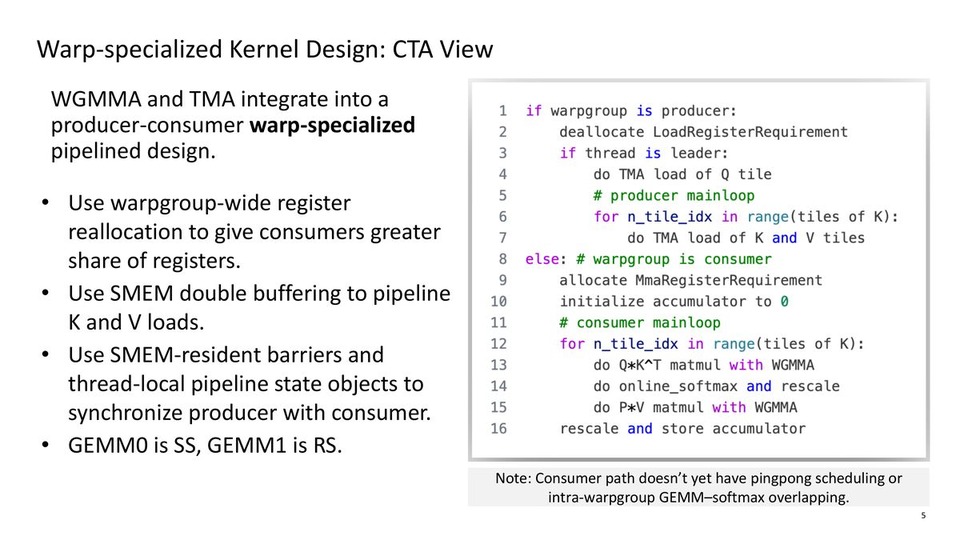

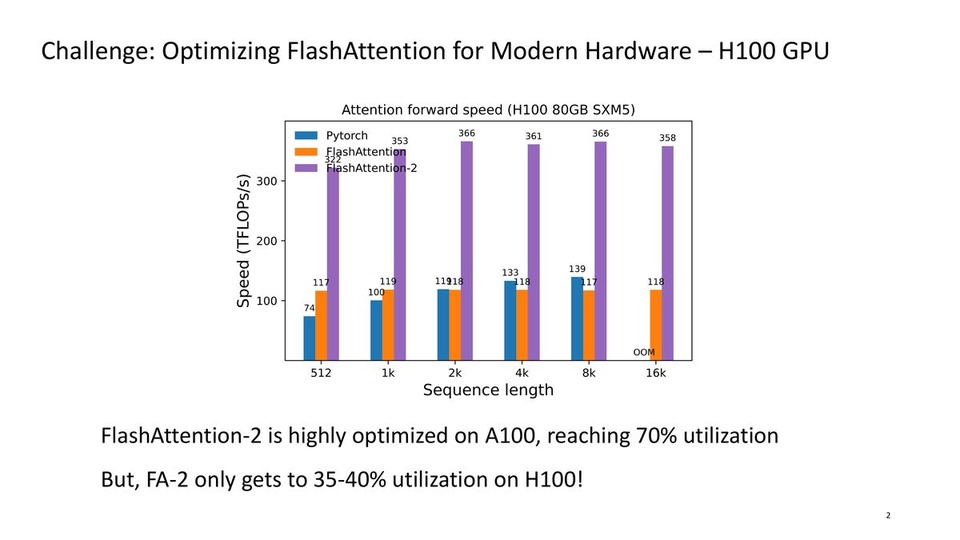

2025-03 | gtc25 | FlashAttention-3: Fast and Accurate Attention with Asynchrony and Low-precision

Tri Dao¹ and Jay Shah² ¹ Together AI / Princeton University, tri@tridao.me ² ...

-

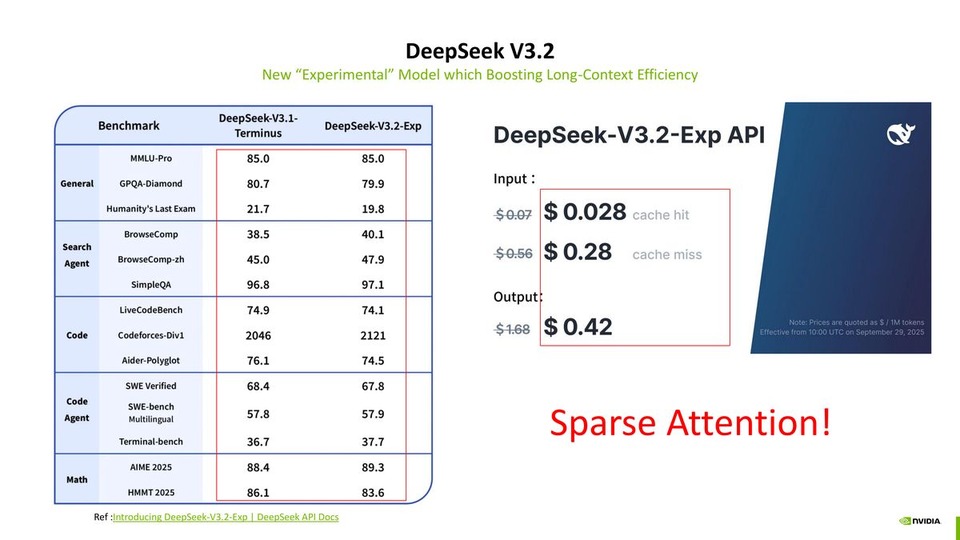

2025-11 | aiday25-hz | Megatron Core MoE Updates - 2025 H2

颜子杰, 陈楷文 | NVIDIA GPU Accelerated Computing Expert team | Nov 07, 2025

-

2025-11 | aiday25-hz | Distributed Implementation of Muon and Emerging Optimizers in Megatron-Core

傅德禹, NVIDIA GPU Accelerated Computing Expert team | AI Open Day | Nov 07, 2025

-

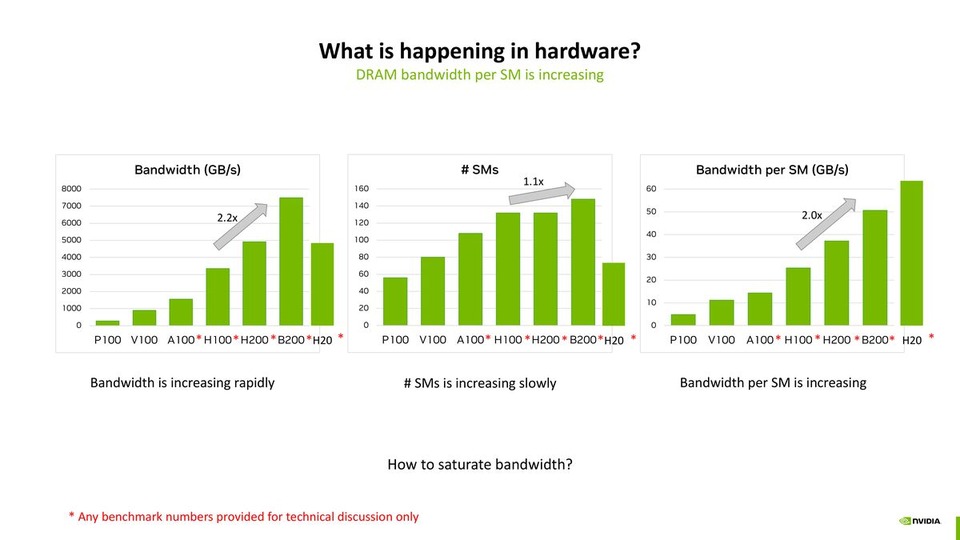

2025-11 | aiday25-hz | Best Practice of MLA Kernel Optimization on Blackwell

王泽宇, Senior Engineer, NVIDIA GPU Accelerated Computing Expert team | November 7, 2025

-

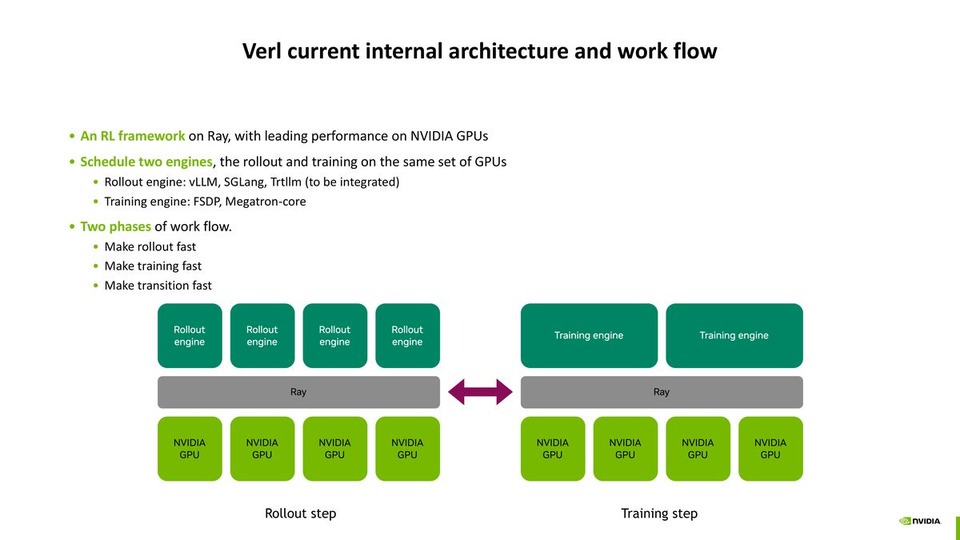

2025-11 | aiday25-hz | Best Practices of Reinforcement Learning with verl

Liwei Ma, Yan Bai, DevTech China | NVIDIA AI Open Day, Nov. 7th, 2025

-

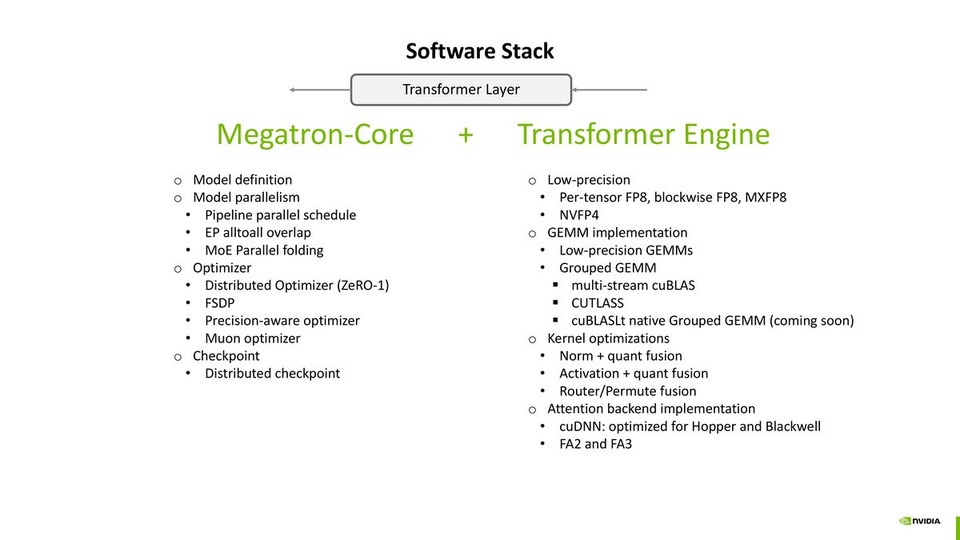

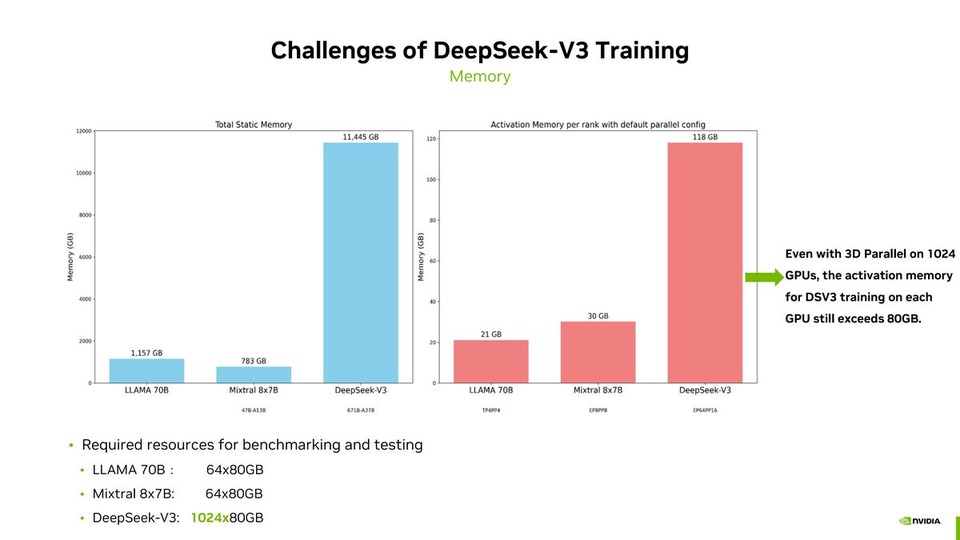

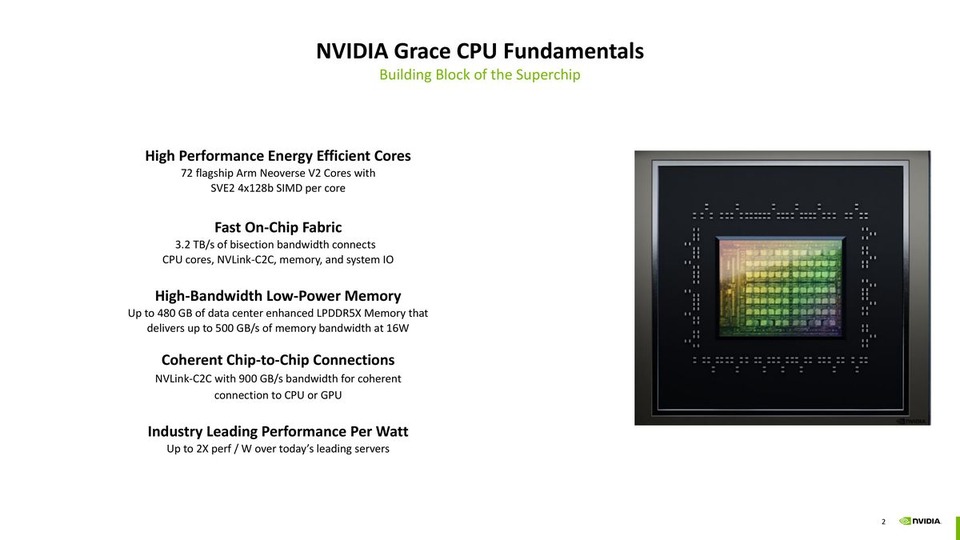

2025-11 | aiday25-hz | DeepSeek-V3 Pre-training Optimization on Grace Blackwell

姚鑫 | Senior Engineer, NVIDIA GPU Accelerated Computing Expert team | NVIDIA AI Open Day, Nov. 7th, 2025

-

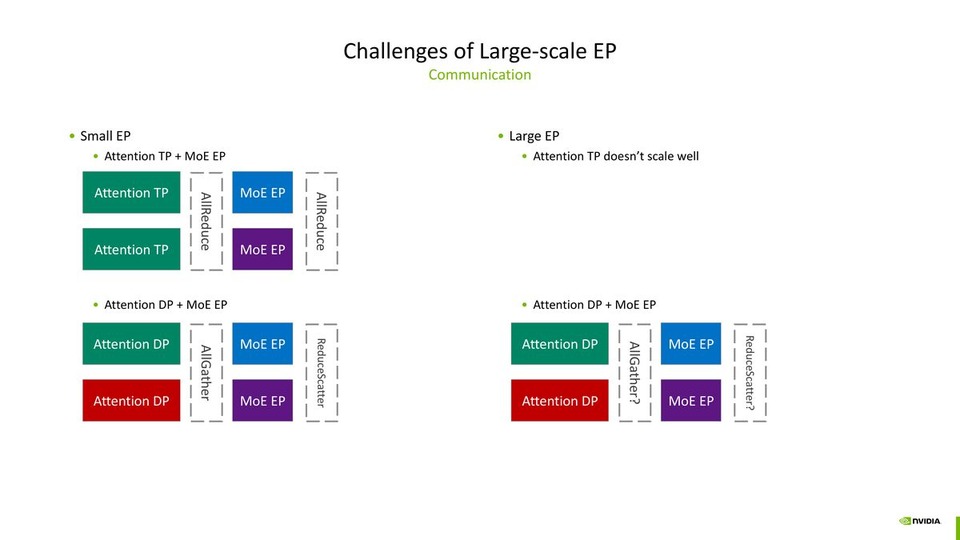

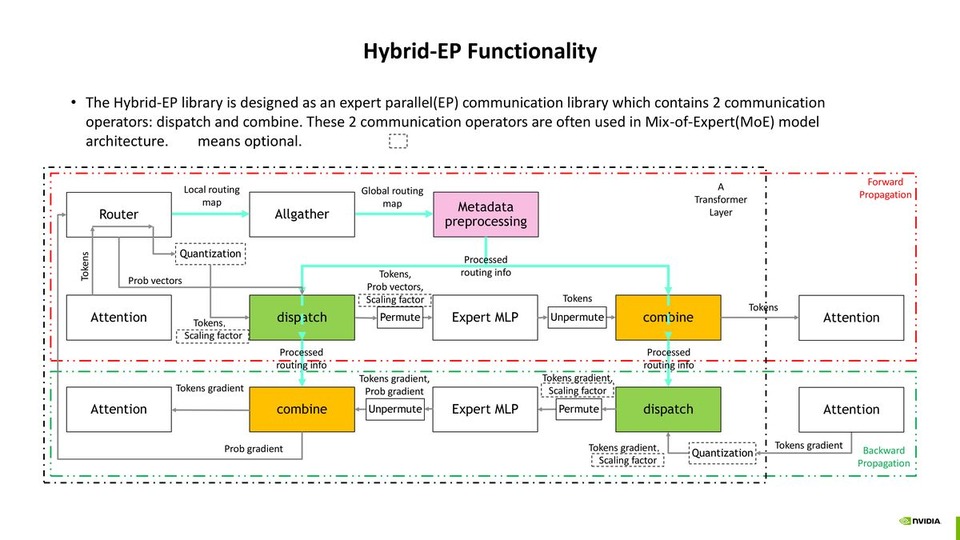

2025-11 | aiday25-hz | Hybrid-EP: An Efficient MoE Communication Implementation

郁凡, 刘童, Senior Engineers, NVIDIA GPU Accelerated Computing Expert team | NVIDIA AI Open Day, Nov. 7th, 2025

-

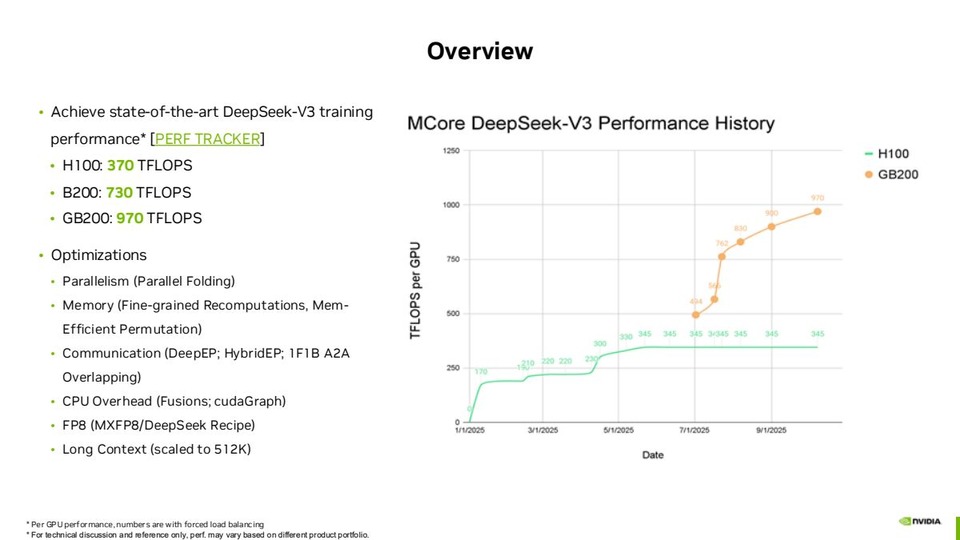

2025-05 | aiday25-bj | MCore MoE in 2025 - DeepSeek-V3 and Beyond

Zijie Yan and Hongbin Liu, NVIDIA

-

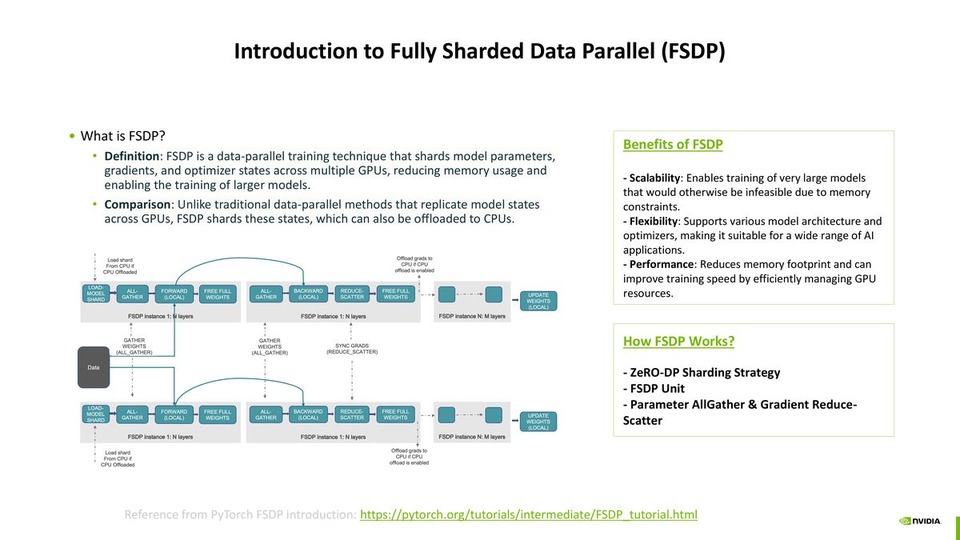

2025-05 | aiday25-bj | Megatron-Core Custom FSDP

Introduction to Fully Sharded Data Parallel (FSDP) - How FSDP works: the ZeRO-DP sharding strategy - How FSDP works: FSDP units ...

-

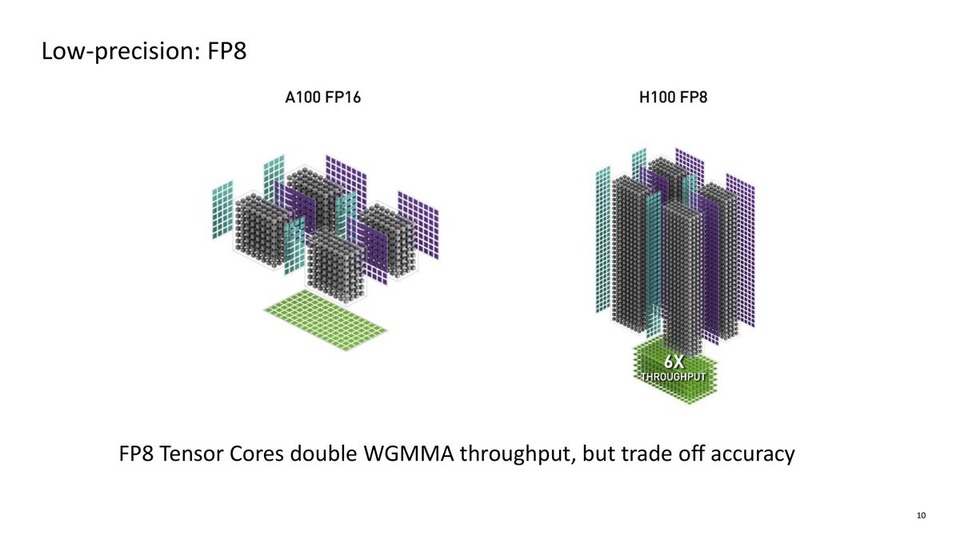

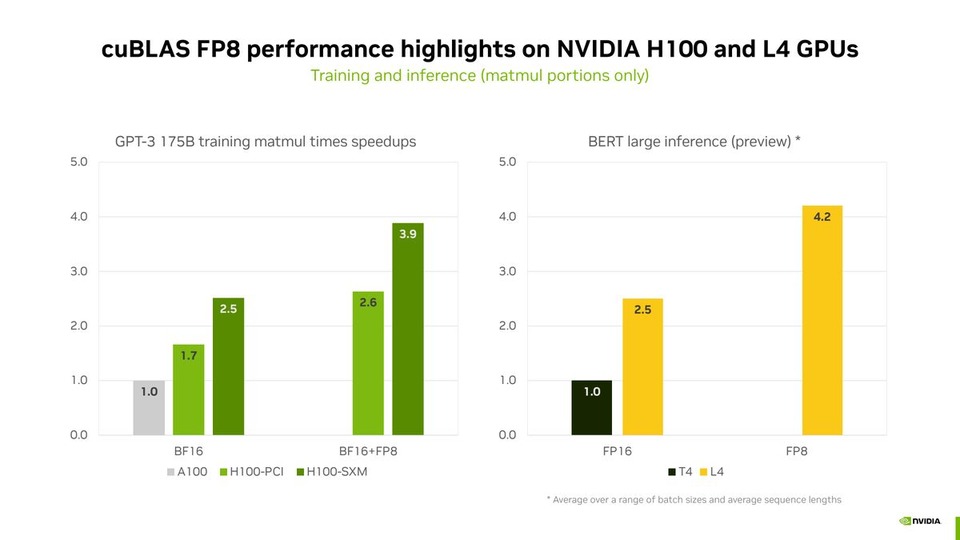

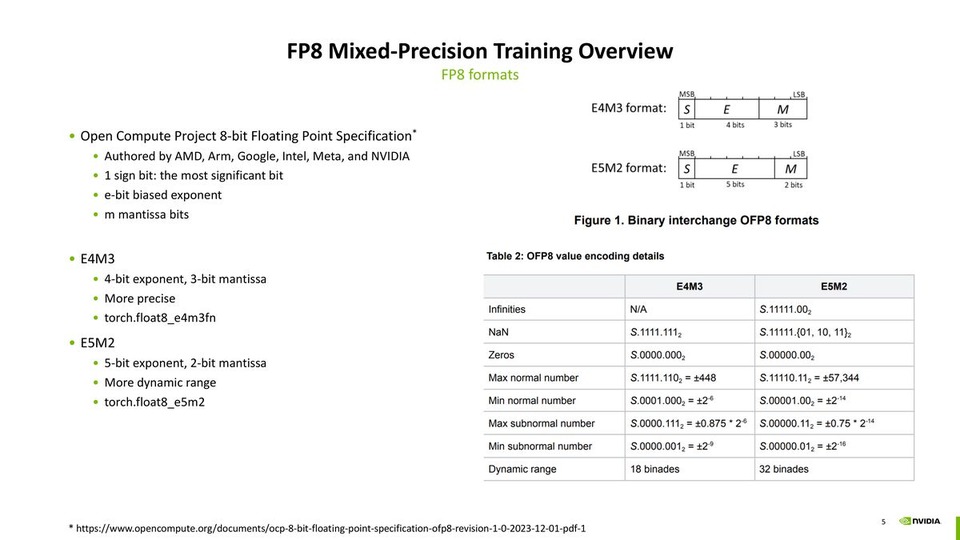

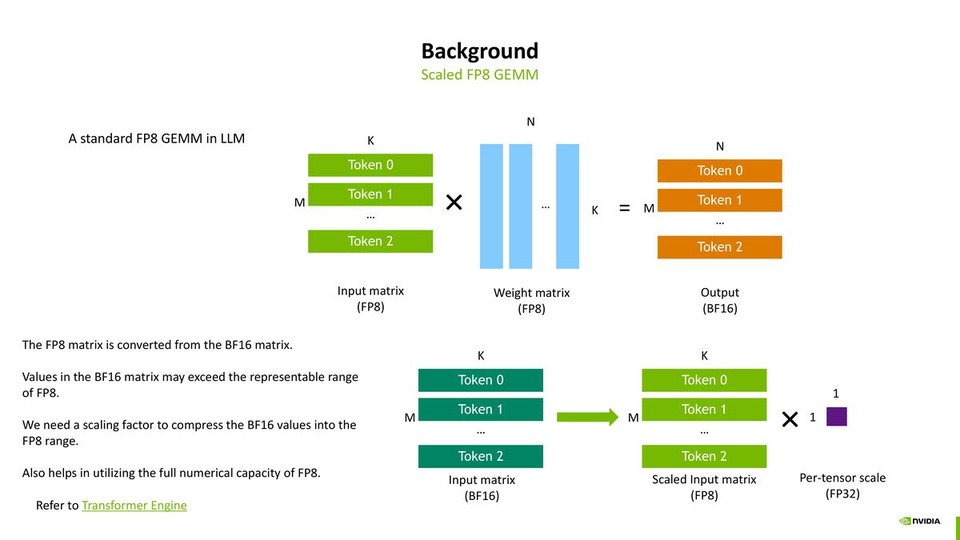

2025-05 | aiday25-bj | FP8 Training Recipes, Performance and Convergence

Xin Yao, DevTech | AI Open Day / May 30, 2025

-

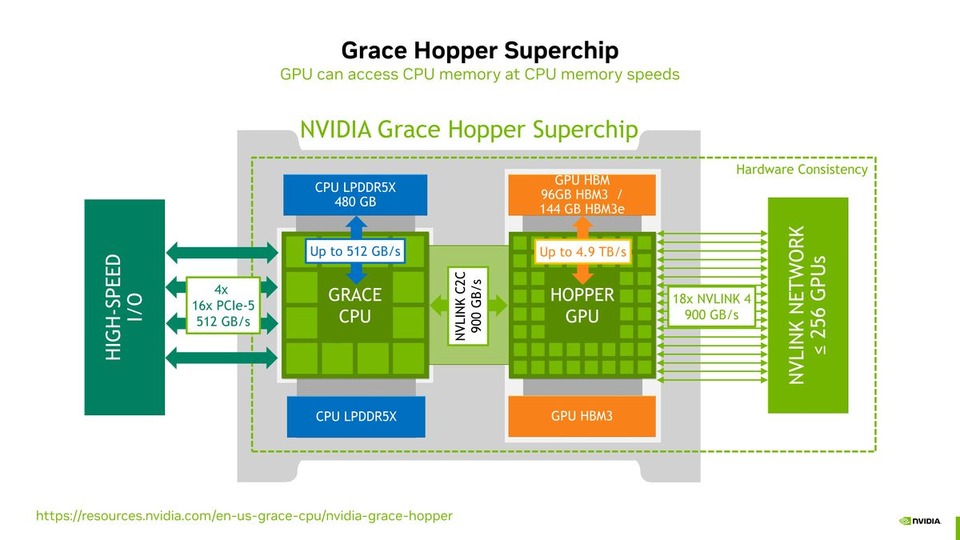

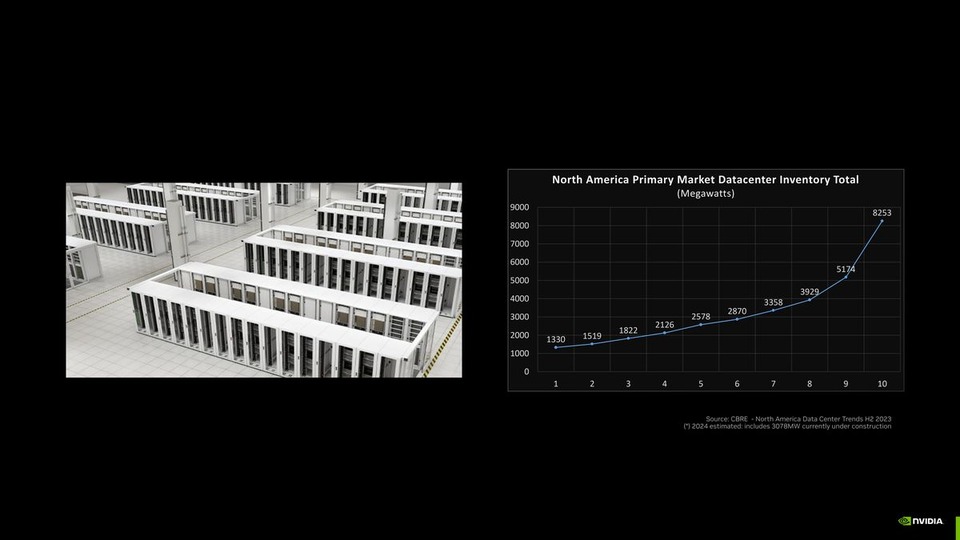

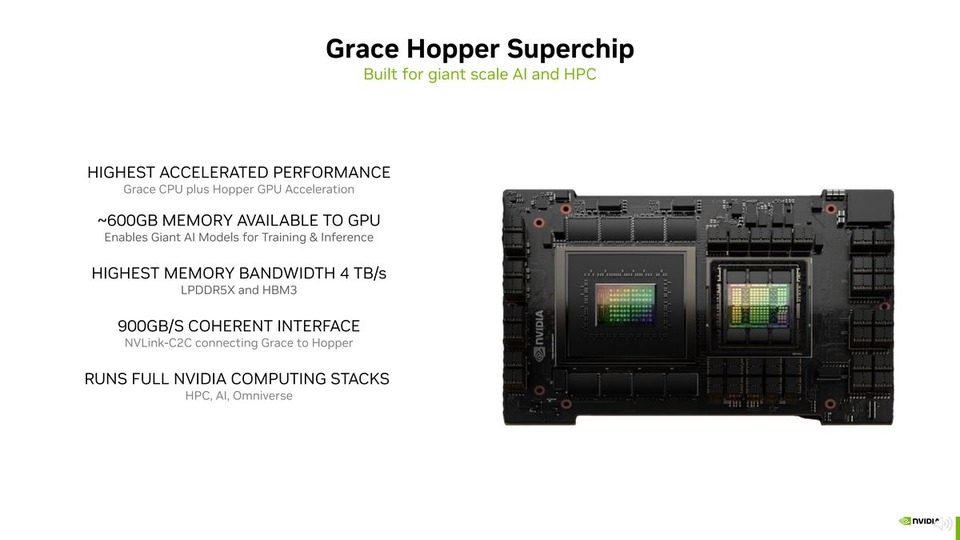

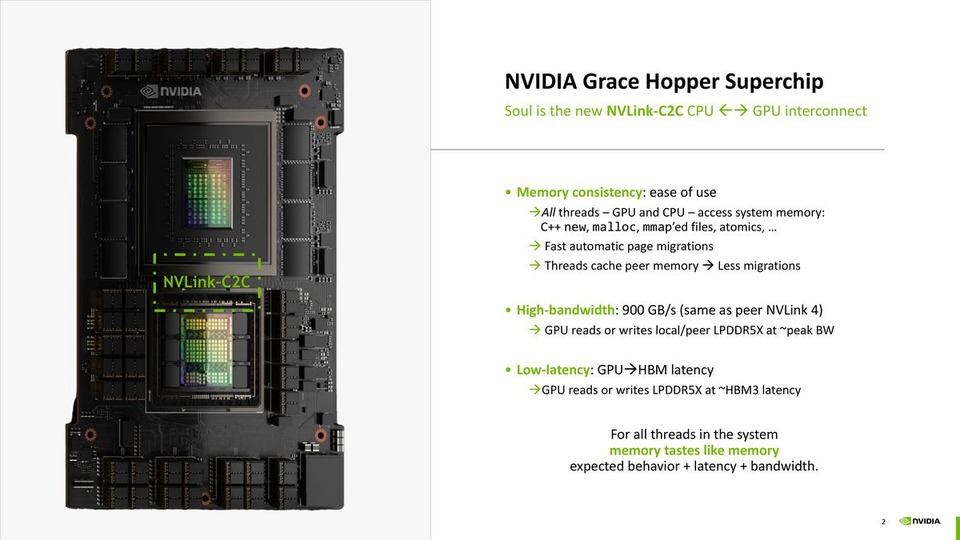

2025-03 | gtc25 | Profiling Large Language Model Trainings on the Grace Hopper Superchip using Nsight Systems

Karin Sevegnani, Senior Solutions Architect, NVAITC UK | GTC 2025 | Giuseppe Fia...

![thumbnail of CUDA Techniques to Maximize Compute and Instruction Throughput [S72685]](/papercache/assets/data/01b087cb.jpg)

![thumbnail of CUDA Techniques to Maximize Memory Bandwidth and Hide Latency [S72683]](/papercache/assets/data/188a47b3.jpg)

![thumbnail of Performance Optimization Tutorial, Part 3 [S72686]: CUDA Techniques to Maximize Concurrency and System Utilization](/papercache/assets/data/aab1851f.jpg)

![thumbnail of Advanced Performance Optimization in CUDA [S62192]](/papercache/assets/data/e0458e86.jpg)